How to Avoid (and Fix) Organic Traffic Drop After a Website Redesign

Website redesign is an integral part of maintaining a website. Ideally, your website should be updated every four or five years. Carrying out a redesign is a great way to upgrade your user experience, freshen up your online presence, and implement new tools – if you do it right.

However, a few wrong moves during a website redesign can harm your SEO and lead to a drop in traffic and lost sales. If these issues aren’t dealt with, they can have a lasting negative effect that’s difficult to recover from.

Discover some of the reasons your website traffic may decline after a redesign and what you can do about them.

Will my website’s traffic drop after a website redesign?

In some cases, a well-done website redesign won’t lead to a traffic drop. In fact, you may even see an increase in traffic. In our experience, traffic loss after a redesign may occur due to specific page removals, which is sometimes inevitable.

That said, if you do experience a slight traffic drop after a redesign, it’s not a cause for alarm. Depending on the changes you made, this is sometimes normal and expected.

For example, when a website is redesigned, any new pages will need to be crawled and indexed. This takes time, so until the process is complete, you may see page traffic ebbing and flowing for a short period until Google has fully crawled and reindexed your site.

Also, if there were any 301 redirects implemented, it can take some time for the authority from previous pages to pass to the new pages. Typically, this takes anywhere from a few weeks to two or three months.

This is especially true if the pages carry a lot of authority, which is why we avoid migrating high-authority pages unless absolutely necessary.

A website redesign is also a shakeup for search engines. In the first few days after a major change, you may see some traffic drop for certain keywords as Google adjusts. Usually, a traffic drop of less than 10% is normal and should recover within a few weeks.

These are all common — and temporary — causes for a traffic drop. But if you see a significant drop in traffic, or more importantly, conversions, without a common cause to pinpoint, it’s important to evaluate what redesign elements may have impacted your organic traffic and how you can fix them.

Most common reasons for a website traffic drop

Recognizing the causes of a traffic drop after a website redesign is the first step. Here are some common causes to consider:

Website domain change

Even a slight change in the domain will de-index the previous website and lead Google to index the new one.

What do we mean by slight? Removing “www.” from a domain that Google had previously indexed is enough to cause your website traffic to drop.

Google sees https://www.test.com and https://test.com as two completely different websites. This means that any authority, backlinks and keywords ranking on www.test.com will not be associated with test.com (unless you redirect www.test.com to test.com).
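To make the www versus non-www point concrete, here is a minimal Python sketch that flags two URLs whose hosts differ only by the "www." prefix. The function names are our own for illustration, and the check only compares hostnames; it says nothing about whether a redirect is actually in place.

```python
from urllib.parse import urlsplit


def bare_host(url: str) -> str:
    """Return the lowercase hostname with a leading 'www.' removed."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def differ_only_by_www(url_a: str, url_b: str) -> bool:
    """True when the two hosts are different but match once 'www.' is ignored."""
    host_a = (urlsplit(url_a).hostname or "").lower()
    host_b = (urlsplit(url_b).hostname or "").lower()
    return host_a != host_b and bare_host(url_a) == bare_host(url_b)


print(differ_only_by_www("https://www.test.com/", "https://test.com/"))  # True
```

If this returns True for your old and new homepage URLs, the old host needs a redirect to the new one.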

Site architecture change

The structure and URL of your website are important factors that affect how Google categorizes and indexes your site.

Website architecture is useful in organizing the content of the site so that both users and search engines can easily comprehend the content, context, and how each page relates to others.

Effective site architecture informs search engines about the nature of your website and helps them navigate and discover all the pages on your site.

Similarly, good site architecture aids users in understanding and locating content.

The ultimate objective of good site architecture is to organize the content hierarchically, with the primary topic on the homepage and sub-topics on lower levels.

Content changes

Content changes can also impact traffic. If you already had good traffic to your pages and you remove content from them, you may see a sudden website traffic drop.

Your content is one of the key factors in driving traffic to your pages. If your pages ranked well prior to the redesign and you removed content from them, Google reindexes the pages and sees that the content is no longer there. This can hurt your rankings, especially if they were high-authority pages.

If your website includes new copy and content, it’s important to have an SEO website redesign strategy in place to optimize for the target keywords before you start the redesign.

On-page optimization changes

Depending on how the content collection and migration were handled during the redesign, sometimes the on-page optimizations aren’t included or taken into account when migrating the content to the new site.

On-page optimization includes keyword targeting balanced with a positive user experience. The keywords should be in key elements of your site, including headings, main body copy, meta description tags, and title tags, all of which are signals Google uses to understand and rank web pages. If these aren’t optimized, Google may demote your site.

Technical changes

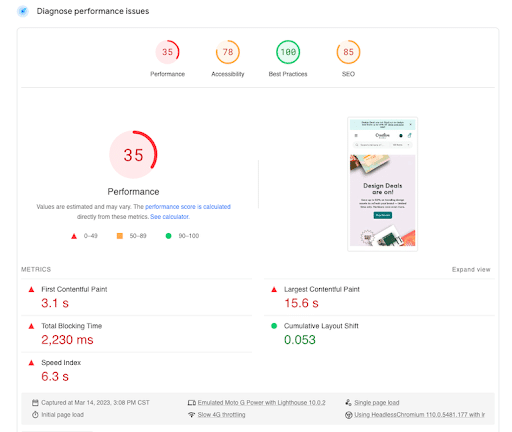

Technical issues can have a big impact on SEO and cause a sudden drop in traffic following a redesign. If, for example, the new site has slower page speed, reduced page performance, or poorer mobile usability than the original website, you will likely see a drop in rankings.

In mid-2021, Google introduced a page experience ranking signal that incorporates Core Web Vitals, a set of quantifiable user experience metrics covering site speed and responsiveness. They include Largest Contentful Paint (LCP), which measures loading performance, Cumulative Layout Shift (CLS), which measures visual stability, and an interactivity metric (originally First Input Delay, replaced by Interaction to Next Paint in March 2024).
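As a rough illustration, the sketch below rates LCP and CLS values against Google's published thresholds (LCP: good up to 2.5 s, poor above 4.0 s; CLS: good up to 0.1, poor above 0.25). The function name and the sample measurements are hypothetical; in practice you would take the values from a tool such as PageSpeed Insights.

```python
# (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate_metric(name: str, value: float) -> str:
    """Classify a Core Web Vitals measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical before/after measurements for the same page.
old_site = {"LCP": 2.1, "CLS": 0.05}
new_site = {"LCP": 4.6, "CLS": 0.18}
for metric in THRESHOLDS:
    print(metric, rate_metric(metric, old_site[metric]), "->", rate_metric(metric, new_site[metric]))
```

Comparing the old and new site side by side like this makes a performance regression visible before it shows up in rankings.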

If you’re considering redesigning your website, it’s crucial that you consider page performance and mobile usability in the process. At Glide, we make sure that our websites are redesigned with quality assurance from our technical lead specialists to ensure page speed and mobile responsiveness are not compromised by a beautiful, but less functional, website.

According to Google, errors that can prevent Google from crawling, indexing, or serving your pages to users include:

- Server availability

- Robots.txt fetching

- Sitemap issues

- Crawling issues

- Page not found

Any of these issues can occur site-wide, such as a website being down, or page-wide, such as a misplaced noindex tag. In either case, there may be a drop in traffic.

How to avoid losing traffic during a website redesign

Taking on a website redesign without losing traffic isn’t a DIY process. If you’re unfamiliar with the best practices to maintain SEO during a website redesign, it’s best to leverage the expertise of an SEO strategist during the initial stages of the website redesign process.

SEO shouldn’t be an afterthought with a website redesign. It’s arguably the most important goal, which is why it needs to be considered from the very start of a redesign project. Bringing in an SEO professional with experience in the website redesign process is essential to avoid a significant drop in traffic.

At GLIDE, here are the steps we take to ensure our clients’ website traffic is not affected by the website redesign. But we don’t stop at neutral. Our goal is to have website traffic and conversions go up, rather than dropping or staying consistent.

1. Identify pages with organic traffic & ranking keywords

This should be one of your first steps before starting your website redesign. Identify and save the pages that are currently driving organic traffic to your website.

You should also identify and save pages that might not currently be driving lots of organic traffic but that are ranking for relevant keywords.

Doing this will provide insights on how to deal with any changes that may impact your most valuable pages.

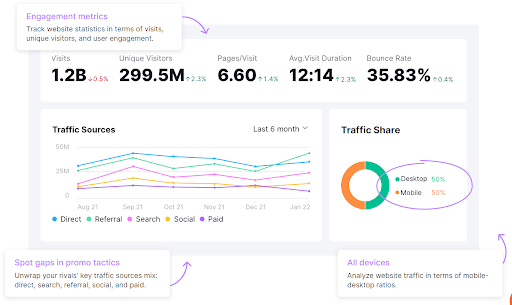

Tools like SEMRush, Ahrefs, Google Analytics, and Google Search Console can be used to analyze this information and plan for next steps.
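Once you have exported page-level data from one of these tools, a short script can shortlist the pages to protect. This is only a sketch: the field names ("page", "clicks", "ranking_keywords") and the thresholds are assumptions for illustration, not the export format of any particular tool.

```python
def pages_to_protect(rows, min_clicks=50, min_keywords=5):
    """Return pages that either drive traffic or rank for enough keywords."""
    return sorted(
        row["page"]
        for row in rows
        if row["clicks"] >= min_clicks or row["ranking_keywords"] >= min_keywords
    )


# Hypothetical rows, shaped like a cleaned-up analytics export.
rows = [
    {"page": "/services", "clicks": 420, "ranking_keywords": 35},
    {"page": "/blog/redesign-tips", "clicks": 12, "ranking_keywords": 9},
    {"page": "/old-press-release", "clicks": 0, "ranking_keywords": 0},
]
print(pages_to_protect(rows))  # ['/blog/redesign-tips', '/services']
```

The second page is kept even though it gets few clicks, because it still ranks for relevant keywords.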

2. Map current content on the pages

As you already know, content changes can be a significant source of traffic drops. Before the redesign, you should identify the pages that have organic traffic and evaluate the content on them.

Then, when the redesign is taking place, try to preserve the content on the pages that already have a lot of visits. Any change in content on these pages can cause the keywords to change positions and lose rankings.

You can extract current keywords from Google Search Console for the specific pages to ensure that your new pages will still be relevant to the user’s queries, but you’ll also have an opportunity to improve them.

Blog posts usually carry the most authority and gain the most traffic, so these are the most vulnerable to a traffic drop from content changes. Make sure you have a plan and process in place for content migration and communicate with your team before the redesign starts.

3. Review previous website 301 redirects

An often-overlooked aspect of SEO and redesign, even by SEO agencies, is 301 redirects.

Agencies often take care of the new 301 redirects but fail to review the previous website’s 301s, which can still impact the website’s rankings.

Importing both sets of redirects and ensuring there are no redirect chains or loops is a key step in distributing link juice the right way.

It’s important to note that it’s better to try to minimize 301 redirects and preserve current URL structure as much as possible. Not every 301 redirect will pass 100% of its authority to another page.

One common issue that can occur here is if you’re switching from https:// to https://www, or vice versa.

Always try to keep your current domain URL structure and only change it if you are still using HTTP.

4. Compare current indexed pages vs. new website pages

Just before the new website is launched, perform a site search using the following search operator: site:yourwebsite.com in Google. Map out all the indexed pages. Then, compare them to your new website URL structure, identify 404 pages, and apply 301s for those pages.
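The comparison itself is a set difference. The sketch below assumes you have collected the indexed URLs and the new site's URLs into two lists by whatever means you prefer; the function names and the trailing-slash normalization are our own choices.

```python
from urllib.parse import urlsplit


def normalize_path(url: str) -> str:
    """Reduce a URL to its path, treating '/page' and '/page/' as the same."""
    path = urlsplit(url).path or "/"
    return path.rstrip("/") or "/"


def urls_needing_redirects(indexed_urls, new_site_urls):
    """Paths that are indexed today but do not exist on the new site."""
    new_paths = {normalize_path(u) for u in new_site_urls}
    old_paths = {normalize_path(u) for u in indexed_urls}
    return sorted(old_paths - new_paths)


indexed = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/services/",
    "https://yourwebsite.com/blog/old-post",
]
new_site = ["https://yourwebsite.com/", "https://yourwebsite.com/services"]
print(urls_needing_redirects(indexed, new_site))  # ['/blog/old-post']
```

Every path in the result would return a 404 after launch unless it gets a 301 to a relevant page.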

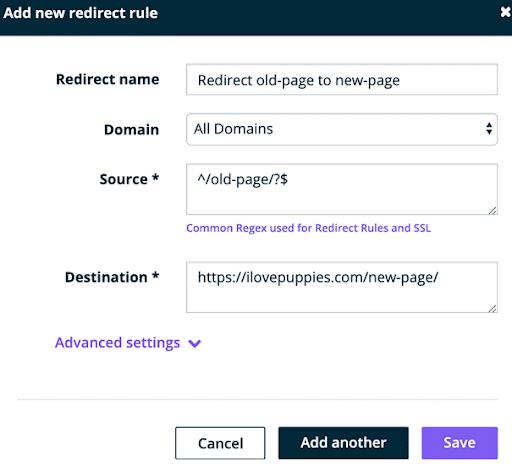

5. Make a plan for new 301 redirects

Make a plan to implement new 301 redirects that would cover all the issues from above, then test them to ensure they’re working.

Consider the following when creating your new 301 redirects plan:

Identify pages with the most backlinks

We recommend using Ahrefs or SEMRush to find out which pages perform best based on the number of backlinks and referring domains. Map these pages, and if any of them will no longer exist after the redesign, redirect it to the most relevant or similar page.

Avoid too many redirects, such as from page A to page B to page C to page D. Instead, redirect page A to page D. This will preserve your overall authority and the link juice you get from backlinks.

Make sure there are no redirect chains or redirect loops. A chain sends a user to another page, which then redirects again; a loop sends users to a redirect page that immediately redirects them back to the original page.

With a redirect chain, the visitor may eventually get where they want to go, but it takes much longer to get there and may cause a bounce.

With a redirect loop, they’re unable to navigate out of the loop.
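Chains and loops are easy to check for once the old and new redirects are combined into one mapping. Here is a minimal sketch that assumes the redirects are held in a plain dictionary of source path to target path (the function name and data shape are ours). It flattens every chain to its final destination and reports sources that end up in a loop.

```python
def resolve_redirects(redirects):
    """Flatten redirect chains and report loops.

    redirects: dict mapping a source path to its target path.
    Returns (flattened, loops): flattened maps each source straight to its
    final destination; loops is the set of sources that never reach one.
    """
    flattened, loops = {}, set()
    for source in redirects:
        seen = [source]
        target = redirects[source]
        while target in redirects:      # the target is itself redirected
            if target in seen:          # we came back to a visited URL
                loops.add(source)
                break
            seen.append(target)
            target = redirects[target]
        else:
            flattened[source] = target  # reached a page that does not redirect
    return flattened, loops


redirects = {"/a": "/b", "/b": "/c", "/c": "/d", "/x": "/y", "/y": "/x"}
flattened, loops = resolve_redirects(redirects)
print(flattened)      # {'/a': '/d', '/b': '/d', '/c': '/d'}
print(sorted(loops))  # ['/x', '/y']
```

Page A now goes straight to page D, and the two looping entries are surfaced so they can be fixed by hand.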

Make sure there are no 404 pages. These errors can harm your brand and impact your SEO by spending crawl budget on non-existent pages instead of existing ones, and they create a frustrating user experience. Overall, these pages leave a bad impression of your website.

404 errors occur if you’ve deleted or removed pages from your site without redirecting the URLs properly, or if you’ve relaunched or transferred your domain without redirecting all your URLs to the new site.

Tools like Screaming Frog can help you find broken links and 404 errors quickly using a site audit.

8. Extract meta information

Prior to the redesign, make sure to download all of the previously optimized meta information and store it in a safe place. This includes the following:

- H1 headings

- Meta titles

- Meta descriptions

Then, when you’re creating your new site and pages, you have all of your on-page elements ready to include to minimize a traffic drop.
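If you would rather script the extraction than export it from a crawler, the following sketch pulls the title, meta description, and H1 headings out of a page's HTML using Python's standard library. The class and function names are our own, and the sample HTML is made up.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collects the <title>, meta description, and <h1> text of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1 = []
        self._current = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._current = "title"
        elif tag == "h1":
            self._current = "h1"
            self.h1.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data


def extract_meta(html: str) -> dict:
    parser = MetaExtractor()
    parser.feed(html)
    return {
        "title": parser.title.strip(),
        "description": parser.description.strip(),
        "h1": [text.strip() for text in parser.h1],
    }


sample = (
    "<html><head><title>Web Design Services</title>"
    '<meta name="description" content="Custom web design."></head>'
    "<body><h1>Web Design Services</h1></body></html>"
)
print(extract_meta(sample))
```

Running this over the old site before launch, and over the new site afterwards, gives you two snapshots you can compare page by page.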

9. Migrate structured data markup

If the website previously had structured data markup on the pages, make sure you migrate it to your new site.

If your previous website was using a plugin that automatically creates the structured data markup such as Yoast or Structured Data & Amp for WordPress, just make sure these are installed and properly configured.

Discovering & fixing what caused your traffic to drop after a website redesign

So, your website has lost traffic due to a website redesign. To fix the issue, you need to discover what exactly happened and understand how to fix it.

First of all, it’s important that you’re sure the website redesign has affected your traffic. Sometimes, it will coincide with a Google Algorithm update.

New Google algorithm updates

Google updates its algorithms often, and though some of the changes are announced, no one really knows all the details. Sometimes a drop in traffic could simply be related to your industry and an algorithm update. This happens often as there are a lot of updates going out.

To check and confirm this, you can check other competitor websites that are in the same industry on SEMRush or Ahrefs for specific time frames and see if they saw a drop in traffic. If so, there’s a good chance it’s due to the update and no cause for immediate concern.

You can also use sensors from SEMRush to see what the latest algorithm updates were and which industry they affected the most. If it includes yours, there’s a good possibility that that’s why you’ve lost traffic.


If you’ve reviewed the performance of your competitors in the search results and you seem to be the only one who has dropped in traffic, continue with our recommended steps to discover and fix the issues.

Based on the most common causes for a traffic drop, it’s important to identify if the issue is coming from:

- Domain change

- Architecture change

- Content changes

- On-page optimization changes

- Technical issues

Tools you’ll need to figure this out:

- Google Search Console

- A website crawler, such as ScreamingFrog

The indexing and crawling reports in Google Search Console can help you find which pages were affected by the website redesign.

With ScreamingFrog, you can find broken links, 404 pages, missing title tags, meta descriptions, and heading tags.

Check the following to discover what changes of the redesign affected your rankings and how to fix them.

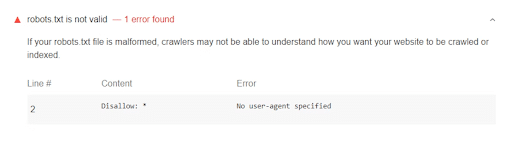

1. Robots.txt file

Your site could be blocking search engines from crawling in the robots.txt file. Developers sometimes leave robots.txt files unchanged after migrating from a development or staging website, often accidentally.

Check your site’s robots.txt file to ensure the rule that blocks all crawlers isn’t present: a “User-agent: *” line (the asterisk represents all search engine bots) followed by “Disallow: /”, which disallows the entire site.

If it is, you will need to remove the disallow rule and resubmit your robots.txt file through Google Search Console and the robots.txt tester to correct it.
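You can also test a robots.txt file programmatically. The sketch below uses Python's built-in urllib.robotparser to check whether a given robots.txt body blocks a crawler from the site root. The function name, the "Googlebot" user agent string, and the test.com root URL are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocks_crawler(robots_txt: str, user_agent: str = "Googlebot",
                   site_root: str = "https://test.com/") -> bool:
    """True when the given robots.txt content stops user_agent from crawling site_root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, site_root)


# A typical leftover from a staging site: every bot is disallowed everywhere.
staging_rules = "User-agent: *\nDisallow: /"
# A more typical live configuration that only blocks the admin area.
live_rules = "User-agent: *\nDisallow: /wp-admin/"

print(blocks_crawler(staging_rules))  # True
print(blocks_crawler(live_rules))     # False
```

To check your live site, you would fetch the contents of your own /robots.txt and pass them in as the first argument.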

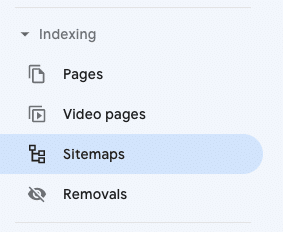

2. Sitemap configuration

The sitemap is a blueprint of your website that helps search engines find, crawl, and index all of the content, as well as denoting which pages are most important.

If your pages are properly linked, the bots can crawl and discover most of your site. You don’t strictly need a sitemap, but it certainly won’t hurt your efforts.

Usually, you can check if your website has a sitemap by adding sitemap.xml to the end of the domain. If your domain is test.com, you can type test.com/sitemap.xml into your browser to check.

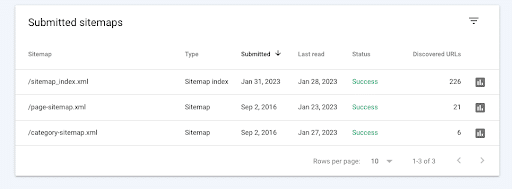

You can also see if you have a sitemap submitted in Google Search Console under the “indexing” section of your Google Search Console dashboard:

If there’s a sitemap submitted, check the sitemap and see if any pages are missing.

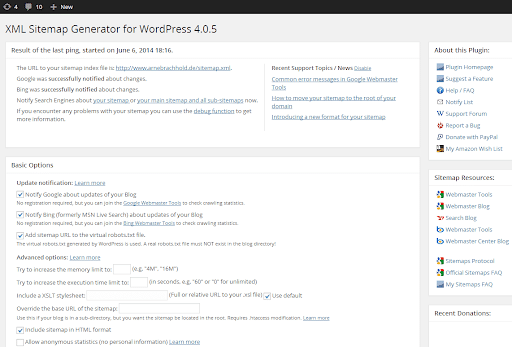

If you don’t have one already, you can use a plugin such as Google XML Sitemap Generator for WordPress to create your sitemap.

However, if you’re using WordPress, we highly recommend you install Yoast and the plugin will create the sitemap automatically for you.

Go over your sitemap manually to see if all the pages are included. If everything looks good, you can submit your sitemap to your Google Search Console account.
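For larger sites, the manual review can be backed up with a small script. This sketch parses a standard XML sitemap with Python's standard library, lists expected pages that are missing from it, and flags any entries still using http://. The function names and sample data are ours, and it assumes a plain urlset sitemap rather than a sitemap index.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set:
    """Return every <loc> URL listed in the sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text}


def missing_from_sitemap(sitemap_xml: str, expected_urls) -> list:
    """Expected pages that the sitemap does not list."""
    return sorted(set(expected_urls) - sitemap_urls(sitemap_xml))


def insecure_urls(sitemap_xml: str) -> list:
    """Sitemap entries that still use the http:// scheme."""
    return sorted(u for u in sitemap_urls(sitemap_xml) if u.startswith("http://"))


SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://test.com/</loc></url>
  <url><loc>http://test.com/services</loc></url>
</urlset>"""

expected = ["https://test.com/", "https://test.com/services", "https://test.com/contact"]
print(missing_from_sitemap(SAMPLE, expected))
print(insecure_urls(SAMPLE))
```

In this sample, the services page shows up in both lists because the sitemap only contains its http:// version.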

After Google crawls it, you can review your sitemap in Submitted Sitemaps to verify. If Google has successfully crawled your sitemap, you will see “Sitemap index processed successfully.”

From here, you can also click on the Coverage Report for your sitemap to see the report and determine how many URLs Google found and how many of those pages ended up in the index.

Any excluded URLs should have a reason attached to them, such as duplicate content, so you can fix or remove them.

3. Not found pages (404s)

You can find a list of all 404 pages in Google Search Console by using Diagnostics > Crawl Errors. If you click on “Not Found,” a list of the URLs that have a 404 error will show.

There are several things that can result in 404 pages, but URL and site architecture changes are the most common. The best way to fix this is with 301 redirects.

If you’re permanently moving a page to a new URL, deleting pages without an alternative, changing your site structure, or migrating your site to a new domain, 301 redirects are the solution. The way you implement a 301 redirect depends on your server and the content management system (CMS) you use, however.

For example, WordPress redirects are simple and straightforward. If you have a WordPress website, you can use a plugin like Yoast SEO Premium or Redirection. You could also apply redirect rules straight from the hosting.
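If you do apply rules at the hosting level, the exact syntax depends on your server. As one hedged example, the sketch below turns a redirect mapping into "Redirect 301" lines in the style of Apache's mod_alias, which you could paste into an .htaccess file. It assumes an Apache host; the function name and sample paths are hypothetical, and other servers such as Nginx use different directives.

```python
def apache_redirect_rules(redirects: dict) -> str:
    """Build one 'Redirect 301 <old path> <new path>' line per mapping entry."""
    lines = []
    for old_path, new_path in sorted(redirects.items()):
        if old_path == new_path:
            continue  # a page redirecting to itself would loop, so skip it
        lines.append(f"Redirect 301 {old_path} {new_path}")
    return "\n".join(lines)


redirects = {
    "/old-services": "/services",
    "/blog/2019/redesign": "/blog/redesign-tips",
    "/contact": "/contact",
}
print(apache_redirect_rules(redirects))
```

Note that Apache's Redirect directive matches by path prefix, so test the generated rules on a staging site before relying on them.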

If you’re struggling with redirects, Glide can help you manage your CMS and provide WordPress support for your website redesign.

4. Crawling errors

Crawl errors occur when a search engine tries to reach a page on your website but fails. Normally, the bot crawls a page, indexes its content, and adds all the links on that page to the list of pages that still have to be crawled. A well-designed website ensures that the bot can get to all the pages on the site; when it can’t, that’s a crawl error.

Google divides crawl errors into:

- Site errors like server errors, DNS errors, or robots errors

- URL errors like soft 404 errors, submitted URLs, or blocked pages from the robots.txt file

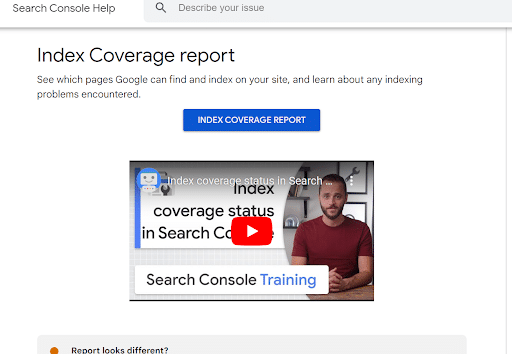

You can find them using the Search Console’s Index Coverage Report to check for any URLs with an error.


5. Relevant schema

Websites often lose traffic after a website redesign because search engines fail to interpret their purpose.

Each page on the website should have a proper and relevant schema, which helps bots index the content on the page.

When you’re doing a redesign, make sure to review the schema markup, especially if you’re manually editing your website code. You can do this with a structured data testing tool once your website is live.
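One way to spot schema that went missing in the redesign is to compare the schema types declared on the old and new versions of a page. The sketch below assumes the markup is JSON-LD inside script tags of type application/ld+json; it does not handle Microdata, RDFa, or nested @graph structures. The class and function names are our own.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every JSON-LD script block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.blocks.append("".join(self._buffer))


def schema_types(html: str) -> set:
    """Return the top-level @type values declared in a page's JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    types = set()
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # broken markup is skipped here; worth flagging in a real audit
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict) and "@type" in item:
                value = item["@type"]
                types.update(value if isinstance(value, list) else [value])
    return types


old_html = ('<script type="application/ld+json">'
            '{"@context": "https://schema.org", "@type": "Organization", "name": "Test"}'
            "</script>")
new_html = "<html><body><p>No markup</p></body></html>"
print(schema_types(old_html) - schema_types(new_html))  # {'Organization'}
```

Any type left in the difference existed on the old page but is absent from the new one.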

6. Page speed

Page speed is one of the most important factors in your site’s performance. It not only enhances the user experience, but it can boost your search engine rankings.

Several factors influence page speed, such as:

- The number of images, videos, and other media files on the page

- The themes and plugins you have installed

- The site’s coding and server-side scripts

The Google PageSpeed Insights tool is easy to use and can measure and test the speed of your pages on both desktop and mobile devices. In addition, as a Google tool, it’s measuring against the search engine’s own performance benchmarks.

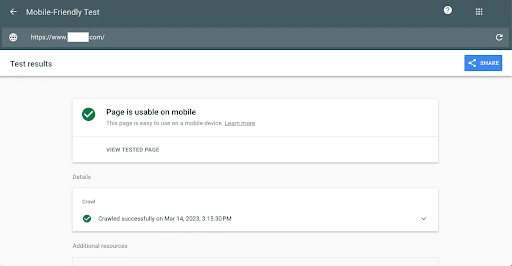

7. Mobile friendliness

The improvement in mobile devices and the availability of fast internet has shifted internet users from desktop to mobile. In the past year, mobile users’ share increased by 5%, and in 2022, 50% of B2B inquiries were placed on mobile. In addition, mobile devices generate 60.66% of website traffic.

A mobile-friendly website is no longer optional or “nice to have.” If you can’t provide a good experience to mobile users, you could drive away a lot of traffic.

Fortunately, Google also has a tool to test your site’s mobile friendliness quickly and easily. It considers the factors in a mobile-friendly site with recommendations and a screenshot of how your site or page looks on a smartphone screen.

Though the tool will provide specific recommendations, generally, mobile-friendly websites are:

- Responsive

- Easy to navigate

- Light on pop-ups

- Fast

- Simple

- Built with clickable elements that are not too close together

Other factors that affect mobile friendliness include the button sizes and the font sizes. They should both be large enough to work effectively on mobile and provide a positive user experience.

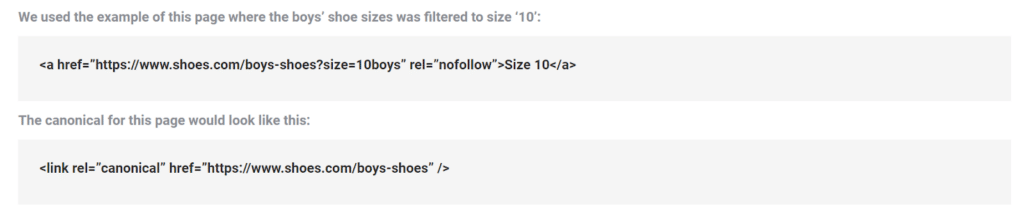

8. Search filters & other URL filtering options

Search filters and other filters can improve the user experience by matching results to the user’s intent. But each combination of filter values generates its own URL, these URLs can look very similar, and their number knows no bounds.

If those filters aren’t indexed properly, filter pages can lead to duplicate content that makes it difficult for search engines to determine the relevant pages for related searches. This impacts SEO and diminishes visibility in the search results pages.

Overall, less is more when it comes to indexing filter pages. One option is a canonical URL: the rel=”canonical” link attribute signals which content is original and which is replicated and shouldn’t be indexed.

You could also fix this issue with nofollow attributes (rel=”nofollow”) instead of the noindex directive (a robots meta tag with content=”noindex”), which instructs the bots not to index a certain page.

There are issues with the noindex directive, since it will exclude a page from the results pages despite good traffic. Nofollow attributes tell the bots not to follow the link to the filter URL, but the filter page can still get indexed.

Here’s an example from Conversion Giant:

9. Canonical tags

As mentioned, canonical tags indicate which content is original and which content is duplicate. Correct canonical tag implementation ensures that multiple versions of the page (duplicates) don’t get indexed separately.

If pages with duplicate content have a self-referencing canonical tag, Google will choose which URL has the “original” content and choose this as the canonical for all of the pages with the duplicate content, and it may not be the one you want. Implement or review canonical tags to ensure you’re getting the indexing you want.

You can check your canonical tags for each page of your website by crawling the website with a crawler like ScreamingFrog.
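For a quick spot check on a single page, a few lines of Python can tell you whether its canonical tag is missing, duplicated, self-referencing, or pointing at another URL. This is a sketch with names of our own choosing; the comparison only ignores a trailing slash and does not resolve relative URLs.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_status(page_url: str, html: str) -> str:
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    if finder.canonicals[0].rstrip("/") == page_url.rstrip("/"):
        return "self-referencing"
    return "points elsewhere"


html = '<head><link rel="canonical" href="https://test.com/shoes"></head>'
print(canonical_status("https://test.com/shoes/", html))           # self-referencing
print(canonical_status("https://test.com/shoes?color=red", html))  # points elsewhere
```

In the second call, the filtered URL points to the main category page, which is usually what you want for filter pages.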

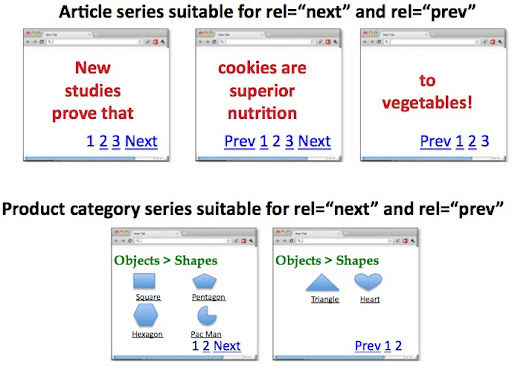

10. Pagination

Large websites need pagination to keep things organized. If it’s done well, it won’t hurt your SEO, but it can have a big impact if you don’t do it properly.

Though infinite scroll provides a better user experience, Google bots can’t scroll or “load more.” Pagination treats each page as a separate entity, so the bot crawls them like they do with any other content.

In the past, Google handled pagination with rel=prev and rel=next link elements, which sit in the page’s code alongside the “Prev” and “Next” links users see when navigating the content. Including these elements helped Google understand which pages were part of the series and which page should appear in the most relevant searches.


In 2019, Google announced that it was dropping those link elements. Now, each paginated page is treated like any standard, individual, and unique page in the index.

The biggest downside of pagination with SEO is duplicate content. With improper canonical tags, paginated pages and the “view all” option may create duplicate content. To avoid this, all of your paginated pages should have a self-referencing canonical tag.

11. On-page optimization

Sometimes, on-page SEO issues can lead to a sudden drop in traffic after your website redesign. Here are some questions to ask:

Was the previous meta information migrated?

Search engines may rewrite the meta information shown in the SERP based on different search terms, but they often show your meta titles and descriptions, and use your image alt text, as written.

If the meta information wasn’t migrated, it can have a huge impact on your CTR, resulting in fewer clicks to the page and a loss of its previously stable position.

How were previous internal links inside of the content linked compared to now?

Sometimes, internal links inside the content still point to the old URLs after the content is migrated, because many people don’t check whether the pages those links targeted still exist.

It’s best to do a find and replace to find and update such internal links.
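A find and replace like this can be scripted against your redirect map. The sketch below rewrites href values in a block of HTML when they exactly match an old URL. It is deliberately simple: the function name is ours, it only handles double-quoted href attributes, and it does not touch links that differ by a query string or trailing slash.

```python
import re

HREF_PATTERN = re.compile(r'href="([^"]*)"')


def update_internal_links(html: str, redirects: dict) -> str:
    """Replace each href that matches an old URL with its new URL."""
    def swap(match):
        old_url = match.group(1)
        return f'href="{redirects.get(old_url, old_url)}"'
    return HREF_PATTERN.sub(swap, html)


content = '<p>See our <a href="/old-services">services</a> and <a href="/contact">contact</a>.</p>'
redirects = {"/old-services": "/services"}
print(update_internal_links(content, redirects))
```

Links that are not in the mapping, like the contact link here, are left untouched.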

Were there changes to the previous H1 and the other page heading structures?

If so, those changes could have affected the traffic to the site. Headers help Google understand the content and make it more user-friendly because it’s more readable.

Are pages using duplicate content?

After a redesign, there can be specific sections on every page that have duplicate content. Consider making it unique for every single page.

You can use ScreamingFrog to find any anomalies with page titles, meta descriptions, heading tags, image alt text and duplicate content.

Going through these common causes of a traffic drop after a website redesign is simply good practice. Though it may be time consuming, you can get ahead of any problems before they significantly impact your site’s ranking and traffic.

Final thoughts and bonus tips from the SEO team

Things to remember and additional tips from our SEO team on how to avoid traffic drops during a website redesign:

Keep URL site structure changes to a minimum

If you can’t keep URL site structure changes to a minimum, make sure that they have proper redirects in place.

It is not recommended to constantly apply 301 redirects, as they may not pass 100% of the current page authority.

With each URL change Google has to go through the process of deindexing the current page and indexing a new page, which can have an impact on traffic for a couple of weeks or months.

By keeping the same URL, we avoid this, and organic traffic is more likely to stay stable.

Make sure the sitemap is up to date

Once the website is published, make sure that the sitemap is updated with any new URLs if the URL structure changed.

Sometimes sitemaps can still have the HTTP versions of URLs in them. This is mostly automated with plugins in WordPress, but if you are on a different CMS, it needs to be checked.

Don’t forget to migrate on-page elements

To avoid losing rankings and traffic on previously optimized pages, make sure that all target keywords and on-page elements are migrated, including structured data markup.

Work on a staging site

Always work on a staging website until you have ensured that everything is working properly; then push changes to the live website.

Store website backups

Always keep a backup of the previous website in case something goes wrong. You’ll have it available to compare to the new one, which can help you identify issues more quickly.

If there is a big drop in website traffic, a backup can help you revert to the original website quickly until you can figure out what the issue was and fix it.

Make sure the website is accessible and indexable

As basic as this might seem, this is often overlooked. Make sure that your website is not being blocked through the robots.txt file and that it doesn’t have a “noindex” tag.
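The noindex check is straightforward to automate for individual pages. This sketch looks for a robots meta tag containing noindex (or none, which Google treats as noindex plus nofollow) in a page's HTML. The names are our own, and it does not inspect the X-Robots-Tag HTTP header, which can also carry a noindex directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives or "none" in finder.directives


print(has_noindex('<meta name="robots" content="noindex, nofollow">'))  # True
print(has_noindex('<meta name="robots" content="index, follow">'))      # False
```

Running this on your key landing pages right after launch catches a staging noindex tag before Google acts on it.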

Check for dummy text

Make sure that pages are not using dummy text. Sometimes pages will end up with dummy text which can cause duplicate and irrelevant content.

Frequently Asked Questions

Still have questions? We have answers.

Why did my organic traffic drop after a website redesign?

Many factors can affect your organic traffic, including the changes you’ve made to your content, a drop in rankings, or a recent Google update. Our guide should help you narrow down the likely cause.

How do you restore organic traffic after a website redesign?

How you restore organic traffic depends on what caused the drop in the first place. Audit your site using the recommendations in this guide to determine the cause and apply the fix.

Does changing website URL or slug affect SEO and ranking?

Yes, URL slugs are vital to SEO and affect user experience. As search engines use the URLs to index a page, whenever you change URLs or slugs, search engines see that as a completely new page.

Will changing the WordPress theme affect website SEO?

Changing a WordPress theme can impact rankings, but the effect depends on how significant the change is. Your theme impacts your site speed, design, and content formatting, all of which have an impact on SEO, so it’s vital to approach these changes strategically.

Still Having Issues & Concerns?

Has your website traffic suddenly dropped after a website redesign, and you are not sure what to do? Follow our guide and identify the issue before it is too late. If you still need help, reach out to Glide Design SEO specialists today for an SEO audit and consultation!